With the newest generation of consoles now fully(ish) available, this is a good point to start talking about how audio will change over this generation. From Mark Cerny’s talks to Epic unveiling its new engine, the future of game audio is almost palpable. Some of the biggest changes will be in audio processing, the transformation of audio signals otherwise known as digital signal processing (DSP). These changes are possible because both PlayStation and Xbox have dedicated a significant amount of hardware to performing complex audio calculations in real time. Some of the terms you might have heard are HRTFs, convolution reverb, and ambisonics. Let’s dive into each term and explore how they will change the future of game audio and game design.

HRTF

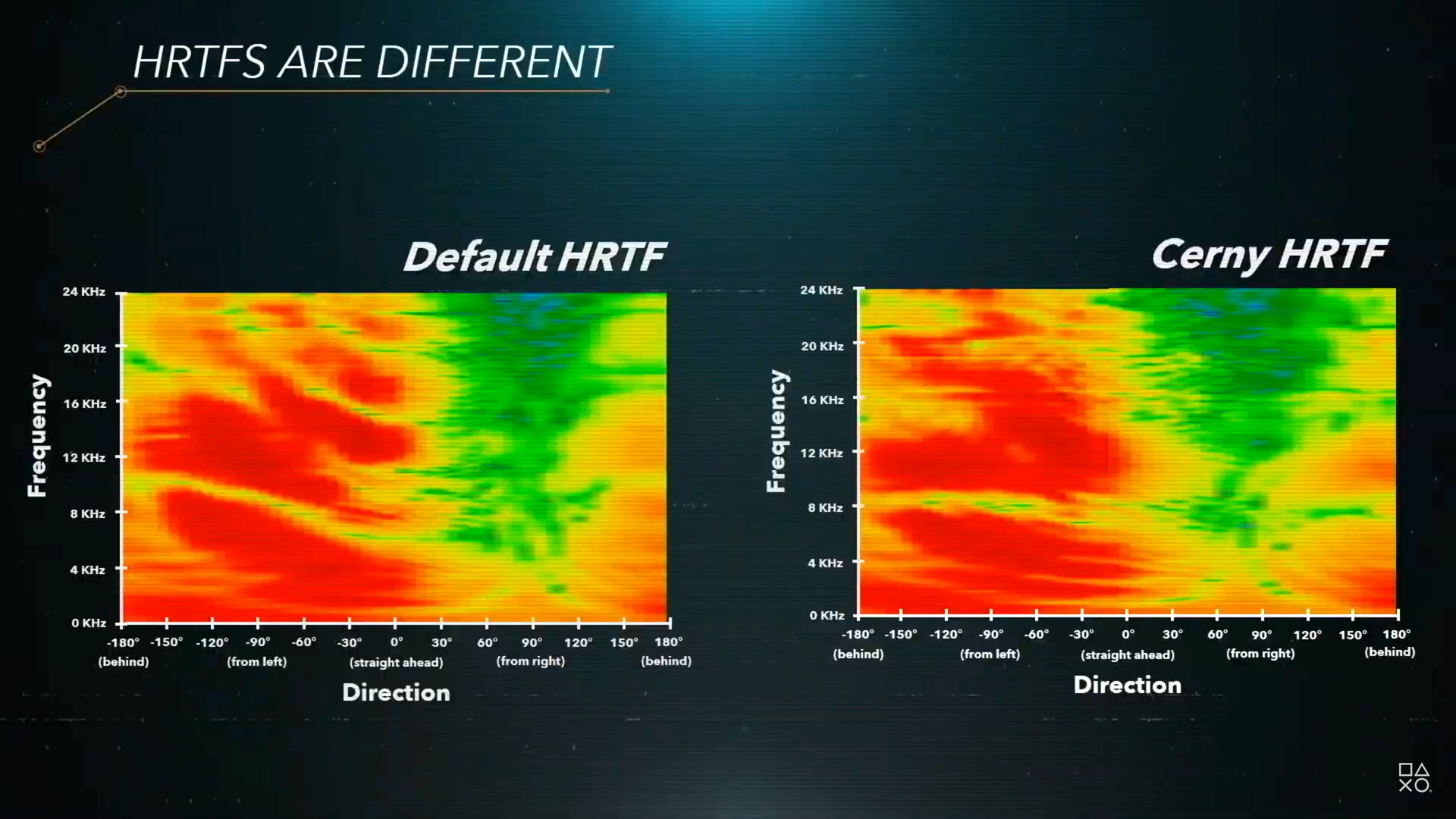

Spectral modeling of an HRTF between a general listener and a specific listener.

You might have heard about HRTFs, or head-related transfer functions, from Mark Cerny’s “The Road to PS5” presentation. An HRTF describes how sound arriving from different positions around the head is heard in each ear, taking into account the shape of the head and ears. It turns out the shape of an ear, and how it absorbs and reflects incoming sound, helps people work out where a sound is coming from. In particular, HRTFs help sell a sound source’s position in three dimensions: above or below your head, as well as around it. It is an adaptable and complex form of audio transformation that needs dedicated hardware that is good at this type of math in order to render it in real time. The result is a more convincing surround effect that better simulates sounds positioned around your head, as well as above and below it.
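
A real HRTF is a pair of measured (or modelled) filters for every direction, so a faithful example needs an HRTF dataset. As a rough sketch of just two of the cues an HRTF encodes, the code below delays and attenuates the far ear. The interaural time difference (ITD) uses Woodworth’s classic spherical-head approximation, ITD = (a/c)(θ + sin θ). The head radius, the 6 dB maximum level difference, and the function names are illustrative assumptions. There is no pinna filtering, so unlike a true HRTF this sketch cannot convey elevation.

```python
import math

# Assumed constants: an average adult head radius and the speed of sound in air.
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0
MAX_ILD_DB = 6.0  # assumed broadband level drop at the far ear for a source at 90 degrees


def woodworth_itd(azimuth_rad: float) -> float:
    """Interaural time difference in seconds (Woodworth's spherical-head formula)."""
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (azimuth_rad + math.sin(azimuth_rad))


def binaural_pan(mono, azimuth_deg, sample_rate=48_000):
    """Return (left, right) channels for a mono signal at the given azimuth.

    Positive azimuth is to the listener's left; values are clamped to +/-90 degrees.
    """
    theta = math.radians(max(-90.0, min(90.0, azimuth_deg)))
    delay = round(woodworth_itd(abs(theta)) * sample_rate)  # ITD rounded to whole samples
    far_gain = 10 ** (-MAX_ILD_DB * abs(math.sin(theta)) / 20)  # crude head-shadow attenuation
    near = list(mono) + [0.0] * delay  # pad so both channels end up the same length
    far = [0.0] * delay + [s * far_gain for s in mono]  # far ear: later and quieter
    return (near, far) if theta >= 0 else (far, near)
```

For a source 90 degrees to the left, this gives an ITD of roughly 0.66 ms, or 31 samples at 48 kHz, with the right ear about 6 dB quieter.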

Convolution reverb

This technology was proudly showcased alongside the Unreal Engine 5 announcement, though it has been used in professional audio studios for around two decades. It’s a type of audio transformation that emulates the acoustics of an environment. It’s similar to the DSP presets you might have on an audio receiver (e.g. Music Hall, Club, Symphony Hall, etc.), but far more detailed. Convolution reverb starts from an impulse response: a recording of how a short, sharp sound (or a sweep of tones) reflects and decays in a real space. Convolving any dry sound with that recording makes it sound as if it had been played in that space. Think of a subway tunnel: your footsteps reflect off the hard walls, while softer materials absorb certain frequencies of each step. That is what convolution reverb captures, but in video games it also considers what each ear hears from the same sound. A sound originating to your left may reach your right ear after a small delay, and each ear may catch an echo at a different time. Many of today’s advances come from better binaural microphone setups used to record impulse responses (capturing how a head with two ears would hear a sound). Unreal Engine 5 can take such a recording and use it to simulate how any sound would be heard in the recorded environment.
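
Convolution reverb boils down to one operation: each sample of the dry sound triggers a scaled copy of the impulse response (IR), and the copies are summed, y[n] = Σ_k x[k]·h[n-k]. The sketch below shows that in plain Python, with a separate IR per ear for the binaural case. The function names are hypothetical, and `synthetic_ir` is only a stand-in (exponentially decaying noise) for a real recorded IR.

```python
import math
import random


def convolve(dry, ir):
    """Direct-form linear convolution: every input sample adds a scaled copy of the IR."""
    out = [0.0] * (len(dry) + len(ir) - 1)
    for i, x in enumerate(dry):
        if x == 0.0:
            continue  # silent samples contribute nothing
        for j, h in enumerate(ir):
            out[i + j] += x * h
    return out


def synthetic_ir(rt60_s, sample_rate=48_000, seed=0):
    """Hypothetical stand-in for a recorded IR: noise whose level falls 60 dB over rt60_s."""
    rng = random.Random(seed)
    n = max(1, int(rt60_s * sample_rate))
    decay_per_sample = math.log(1000.0) / n  # a 1000x amplitude drop is -60 dB
    return [rng.uniform(-1.0, 1.0) * math.exp(-decay_per_sample * k) for k in range(n)]


def binaural_reverb(dry, ir_left, ir_right, wet=0.3):
    """Convolve one dry signal with a left-ear and a right-ear IR, then blend dry and wet."""
    def mix(ir):
        wet_signal = convolve(dry, ir)
        return [(1 - wet) * (dry[k] if k < len(dry) else 0.0) + wet * w
                for k, w in enumerate(wet_signal)]
    return mix(ir_left), mix(ir_right)
```

Direct convolution costs len(dry) × len(ir) multiplications, which grows quickly for multi-second IRs, so real-time engines typically use FFT-based, partitioned convolution to get the same result far more cheaply.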

Ambisonics

Ambisonics is another audio technique that has been around for a long time: it was developed in the 1970s as a way to record and reproduce a full sphere of sound, including above and below the listener. Recording artists have long played with panning audio across channels to give sound a more three-dimensional presence, and video games have done the same for decades, albeit in a fairly rudimentary way. Only with binaural recording, and with algorithms that more accurately simulate how audio from a sound emitter reaches each ear differently, has ambisonics become truly convincing. It also ties HRTFs and convolution reverb together. All three technologies can work together to model reflections, how sound changes over time and space, and how audio reaches each ear at different times, creating a truly convincing 3D aural environment. Convolution reverb presents sound as it would travel through a 3D space. The HRTF simulates how sounds positioned around a head are heard, depending on the shape of the ear and where the sound comes from. Ambisonics represents the whole sound field around the listener, so each sound keeps its direction around, above, or below them.
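
Under the hood, first-order ambisonics stores a sound field in four channels: one omnidirectional component (W) and three figure-of-eight components pointing front/back (X), left/right (Y), and up/down (Z). The sketch below encodes a mono sample at a given direction, rotates the field for a head turn, and decodes it with a simple virtual cardioid microphone. It assumes the AmbiX convention (ACN channel order, SN3D normalization) with positive azimuth to the left. The function names are hypothetical, and a headphone renderer would normally decode through HRTFs rather than virtual microphones.

```python
import math
from typing import NamedTuple


class BFormat(NamedTuple):
    """One first-order ambisonic sample in ACN channel order with SN3D normalization."""
    w: float  # omnidirectional pressure
    y: float  # left/right figure-of-eight
    z: float  # up/down figure-of-eight
    x: float  # front/back figure-of-eight


def encode(sample, azimuth_deg, elevation_deg):
    """Place a mono sample in the sound field (positive azimuth = left, positive elevation = up)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return BFormat(
        w=sample,
        y=sample * math.sin(az) * math.cos(el),
        z=sample * math.sin(el),
        x=sample * math.cos(az) * math.cos(el),
    )


def rotate_yaw(b, yaw_deg):
    """Counter-rotate the whole field when the listener turns their head left by yaw_deg."""
    psi = math.radians(yaw_deg)
    return BFormat(
        w=b.w,
        y=b.y * math.cos(psi) - b.x * math.sin(psi),
        z=b.z,
        x=b.x * math.cos(psi) + b.y * math.sin(psi),
    )


def virtual_cardioid(b, azimuth_deg, elevation_deg):
    """Decode by pointing a virtual cardioid microphone (e.g. one speaker feed) at a direction."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    dx = math.cos(az) * math.cos(el)
    dy = math.sin(az) * math.cos(el)
    dz = math.sin(el)
    return 0.5 * (b.w + b.x * dx + b.y * dy + b.z * dz)
```

Because a head turn is just a few multiplications on four channels, head tracking can rotate the entire sound field at once instead of repositioning every source individually.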

Ray-traced Audio

Did you think ray tracing was only used for graphics? Ray tracing is used for all sorts of calculations, such as determining distances to objects or taking very precise virtual measurements. In audio, ray tracing can determine how a sound reflects off, and is absorbed by, the surfaces of a virtual environment. More specifically, ray-traced audio can make HRTF, ambisonic, and convolution reverb transformations even more accurate. Imagine being in a large cave and hearing how the sound reflecting off a wall changes as you get closer and closer to it. You could also simulate how soft materials absorb sound, and the same goes for echoes. You might think games can already do all of this, but, as with graphical reflections, developers have mostly relied on tricks that approximate the effect without simulating the underlying physics. Ray-traced audio has the potential to enhance how we hear games. Games that rely heavily on audio (think Amnesia or Layers of Fear) could use ray-traced audio to create truly striking atmospheric effects. Ray-traced audio will take everything we can already do with audio transformations and turn it up to 11.
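
A minimal sketch of the idea follows. It assumes a 2D rectangular room, a single frequency-independent absorption coefficient, and a listener modelled as a small detection circle; these are all simplifications, and `trace_echogram` is a hypothetical name. Rays leave the source in evenly spaced directions, bounce specularly off the walls, and lose energy at each bounce. Every time a ray crosses the listener, its travel time and remaining energy are recorded.

```python
import math

SPEED_OF_SOUND_M_S = 343.0


def trace_echogram(room_w, room_h, source, listener, listener_radius=0.3,
                   absorption=0.3, num_rays=360, max_bounces=20):
    """Return sorted (arrival_time_s, energy) pairs for ray segments that reach the listener."""
    lx, ly = listener
    arrivals = []
    for i in range(num_rays):
        angle = 2 * math.pi * i / num_rays
        px, py = source
        dx, dy = math.cos(angle), math.sin(angle)
        energy = 1.0 / num_rays  # each ray carries an equal share of the emitted energy
        travelled = 0.0
        for _ in range(max_bounces + 1):
            # Distance along the ray to the nearest wall (walls at x=0, x=room_w, y=0, y=room_h).
            tx = ((room_w if dx > 0 else 0.0) - px) / dx if abs(dx) > 1e-12 else math.inf
            ty = ((room_h if dy > 0 else 0.0) - py) / dy if abs(dy) > 1e-12 else math.inf
            t_wall = min(tx, ty)
            # Ray/circle test: does this straight segment enter the listener's circle?
            fx, fy = px - lx, py - ly
            b = fx * dx + fy * dy
            c = fx * fx + fy * fy - listener_radius ** 2
            disc = b * b - c
            if c > 0 and disc >= 0:
                s = -b - math.sqrt(disc)  # distance along the ray to the entry point
                if 0.0 <= s <= t_wall:
                    arrivals.append(((travelled + s) / SPEED_OF_SOUND_M_S, energy))
            # Advance to the wall, reflect specularly, and lose energy to absorption.
            px, py = px + t_wall * dx, py + t_wall * dy
            travelled += t_wall
            if tx <= ty:
                dx = -dx
            if ty <= tx:
                dy = -dy
            energy *= 1.0 - absorption
    return sorted(arrivals)
```

The resulting list is an echogram: the earliest entry is the direct sound, and later, weaker entries are reflections. An echogram like this is one way a game could derive an impulse response for a space that was never recorded.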

Adaptive Audio Advancements

Adaptive audio systems are what most game developers already use. They are usually third-party middleware, like Wwise or FMOD, for building 3D soundscapes and defining how audio reacts to game triggers and events. Not only do these tools make it easier to set up the aural landscape of a 3D game, they also make it easier to trigger layers and loops of background music so that the music adapts to the gameplay on screen. This lets developers focus more on crafting an interesting musical landscape than on the tech.
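
Vertical layering is one common way to make music adapt. It can be sketched as a mapping from a single game-driven intensity value to a gain for each music layer. Middleware exposes this kind of control through game parameters (Wwise calls them RTPCs), but the code below does not use either tool’s API; the layer names, fade ranges, and functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MusicLayer:
    name: str
    fade_start: float  # intensity at which the layer begins to fade in
    fade_end: float    # intensity at which the layer reaches full volume

    def gain(self, intensity: float) -> float:
        """Linear gain (0..1) for this layer at the given intensity."""
        if intensity >= self.fade_end:
            return 1.0
        if intensity <= self.fade_start:
            return 0.0
        return (intensity - self.fade_start) / (self.fade_end - self.fade_start)


# Hypothetical setup: the pad always plays, drums and brass join as intensity rises.
LAYERS = [
    MusicLayer("pad", 0.0, 0.0),
    MusicLayer("drums", 0.2, 0.5),
    MusicLayer("brass", 0.6, 0.9),
]


def layer_gains(intensity: float) -> dict:
    """Map a game-driven intensity value in [0, 1] to a gain for every music layer."""
    intensity = min(max(intensity, 0.0), 1.0)
    return {layer.name: layer.gain(intensity) for layer in LAYERS}
```

At an intensity of 0.35 the drums sit at half gain and the brass is still silent; the game only has to update one number as the action heats up.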

While these systems are very good at adapting to macro changes (level events, boss battles, fight scenes, etc.), they still can’t respond quickly enough to small moments like a finishing blow or a particularly harrowing jump. Advances in how games trigger events, bringing adaptive music down to moment-to-moment actions, will open up new creative possibilities. Additionally, extra processing power will make musical transitions more seamless, and AI algorithms may even make it possible to generate music on the fly.
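
One common reason music struggles to react to split-second moments is that adaptive-music systems usually hold transitions and stingers until the next beat or bar so they stay in time. The sketch below computes that boundary and the resulting delay, assuming a fixed tempo and 4/4 time; the function names are hypothetical.

```python
import math


def next_grid_time(event_time_s, bpm, beats_per_step=1, song_start_s=0.0):
    """Earliest musical boundary at or after event_time_s.

    beats_per_step=1 snaps to the next beat; 4 snaps to the next bar in 4/4 time.
    """
    step_s = 60.0 / bpm * beats_per_step
    # Subtract a tiny epsilon so an event exactly on a boundary is not pushed a step later.
    steps = math.ceil((event_time_s - song_start_s) / step_s - 1e-9)
    return song_start_s + steps * step_s


def quantization_delay(event_time_s, bpm, beats_per_step=1, song_start_s=0.0):
    """How long the player waits between the action and the musical response."""
    return next_grid_time(event_time_s, bpm, beats_per_step, song_start_s) - event_time_s
```

At 120 BPM, a finishing blow at 1.2 s synced to the next bar would only be scored at 2.0 s, 0.8 s after the hit; syncing to the next beat cuts that to 0.3 s, which shows why finer-grained triggering matters for moment-to-moment music.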

The Future of Game Audio

We’ve covered a lot of cool and exciting audio technologies. By now you should be familiar with what each one can do, but what does that mean for games? The most obvious advancement will be the realistic rendering of 3D soundscapes, which will be far more accurate and enveloping this generation. The most impressive changes, though, will be the design possibilities that accurate audio simulation enables. Imagine a horror game that forces you to rely on how sounds bounce around a dark room to navigate it safely. Games like Hellblade have explored this idea, but with more audio processing power, the experience could be transformative. Clever puzzles could rely on listening to objects move through space and hit different materials to determine an outcome. Just as ray-traced reflections simulate real reflections and open up novel ways of designing games, so too can ray-traced audio.

We’re very psyched about the next generation of audio enhancements, and we cannot wait to see how talented engineers and designers advance the way we think of audio in games.